The Google Search Console (GSC) was developed to help website owners monitor their traffic and search performance. It’s such a valuable source of information because it provides real data on how users interact with your website in the SERPs!

There are tons of different tools for analyzing search performance and tracking your rankings, but many of them use scraped data, also known as third party data. Some use a mix, and some, like Ryte, use only GSC data. In this article, we explain the differences between third party data and GSC data, give some recommendations about where to use each one, and bust a few myths about GSC data.

What is the difference between scraped data and GSC data?

Third party data, or scraped data, is data that a bot "scrapes" from the search engine result pages. Because of the way it is retrieved, it doesn’t provide any information about user behaviour or user interactions with your site; it can only give you an idea of your website’s visibility in the SERPs.

An important point to note is that retrieving data in this way does not conform to Google’s guidelines. Google’s John Mueller made this point in a tweet a few years back.

This can lead to all sorts of problems. Scraped data providers are at risk of being shut down by Google, which makes scraped data an unreliable and unsustainable source.

The Google Search Console uses direct user data from the search engine results pages. That means the information you see with the Google Search Console actually reflects how users are interacting with your website in the search results.

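The Google Search Console provides four metrics: clicks, impressions, CTR (click-through rate) and position. Clicks is the number of times users clicked through to your website from the search results, impressions is the number of times your website appeared in the search results, CTR is clicks divided by impressions, and position is your average ranking position. Clicks is the most valuable metric for understanding user behaviour, as it shows a concrete interaction between the user and your website. Click data isn’t available with tools that use third party data, and since CTR is calculated from clicks, CTR data isn’t available either.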
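
As a quick illustration, CTR is simply clicks divided by impressions, which is why a tool without click data can’t report it. Here’s a minimal Python sketch; the function name and the numbers are hypothetical, not taken from any real report:

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a fraction: clicks divided by impressions."""
    if impressions == 0:
        return 0.0  # no impressions means nothing could be clicked
    return clicks / impressions

# Hypothetical example: 30 clicks from 1,200 impressions
print(f"{ctr(30, 1200):.2%}")  # → 2.50%
```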

Advantages of Google Search Console over scraped data

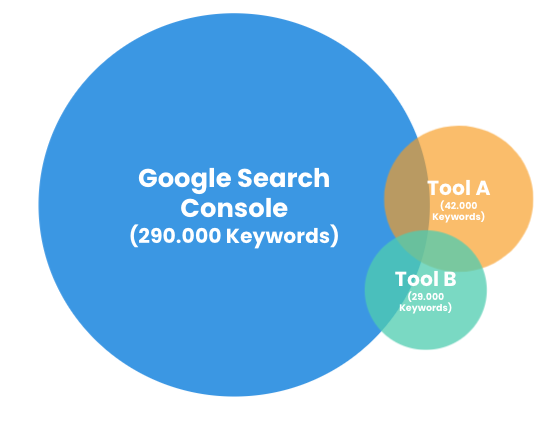

One of the biggest advantages of Google Search Console data (other than the fact that it complies with Google’s guidelines) is that it simply provides more data. This diagram shows the amount of keyword data found in GSC compared with scraped data tools.

The coronavirus crisis has also shown how important it is to work with GSC data. Because GSC is the most holistic source of data, it clearly helps you discover new keywords your website is ranking for. This was particularly useful during the crisis, as website owners could quickly discover new questions that users were asking as a result of it.

So when it comes to in-depth analyses of your search performance, we definitely recommend using the Google Search Console!

When should you use GSC data and when should you use third party data?

Of course, neither is perfect. It really depends on the task you want to complete.

Competitor analysis

Competitor analysis is only possible with scraped data. You can only use the Google Search Console for your own website, and you have to verify ownership, so it isn’t possible to set up a Google Search Console account for a website that isn’t yours. This means you cannot carry out extensive competitor analyses with GSC. For competitor analysis and research, scraped data or third party data is necessary, and there are loads of really great tools for this.

Keyword monitoring

GSC is the better choice for keyword monitoring. GSC uses actual user data and therefore gives you a more accurate and reliable picture of how your keywords are performing. Moreover, the Google Search Console has much more data than scraped data tools.

GSC does have some limitations for keyword monitoring. Its filtering options are limited, and you can only see 1,000 rows of data in the interface. This could mean you miss out on valuable keywords that have great potential but just don’t make it into the top 1,000. That’s where tools like Ryte can help. Ryte imports all the keyword data from GSC and provides extensive filtering options and actionable reports, so you can really get the most out of the data. What’s more, with Ryte you can even set up keyword alerts, meaning you get emails when keywords gain or lose clicks, impressions, CTR, or position.
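
If you work with the data yourself, note that the 1,000-row limit applies to the GSC interface. The Search Console API’s Search Analytics query accepts a `rowLimit` (up to 25,000 rows per request) and a zero-based `startRow`, so larger result sets can be fetched page by page (the API has its own quotas and data limits, so check the official documentation). The minimal Python sketch below shows that paging loop; `fetch_page` is a hypothetical callable standing in for the real API request, so the loop can be tried without credentials:

```python
from typing import Callable, Iterator

MAX_ROWS_PER_REQUEST = 25_000  # documented maximum for rowLimit

def iter_rows(fetch_page: Callable[[dict], dict], base_body: dict,
              page_size: int = MAX_ROWS_PER_REQUEST) -> Iterator[dict]:
    """Yield rows page by page until a page comes back with fewer rows than requested.

    fetch_page (hypothetical) sends one Search Analytics request body and
    returns the parsed JSON response, which may or may not contain "rows".
    """
    start_row = 0
    while True:
        body = {**base_body, "rowLimit": page_size, "startRow": start_row}
        rows = fetch_page(body).get("rows", [])
        yield from rows
        if len(rows) < page_size:
            break  # a short (or empty) page means there is nothing left
        start_row += page_size

# Example request body: all queries for January 2024 (dates are illustrative)
base_body = {"startDate": "2024-01-01", "endDate": "2024-01-31", "dimensions": ["query"]}
```

With the google-api-python-client library, `fetch_page` could be something like `lambda body: service.searchanalytics().query(siteUrl=site_url, body=body).execute()`, where `service` is an authorized Search Console client and `site_url` is your verified property. Stopping on a short page usually saves one extra, empty request.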

Reporting, or detecting anomalies in your search performance

GSC data is more useful for analysing data, reporting, or detecting anomalies in your search performance. Tools that use third party data focus heavily on a proprietary, artificial metric they call "visibility", which in theory translates into impressions, the metric that shows how often your site is seen by users in the SERPs. In practice, however, this metric neither correlates with your actual impressions nor is it as useful as clicks: you want users to click through to your site and convert. Losing impressions (which can happen during a Google update) isn’t as important as losing clicks, but a tool that uses third party data will show you a big drop in visibility, which simply isn’t accurate.
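
To make "detecting anomalies" a bit more concrete, here’s one simple way to flag unusual days in a daily clicks series exported from GSC: compare each day with the mean and standard deviation of the days before it (a z-score check). This is a minimal Python sketch, not how any particular tool does it; the 7-day window, the threshold of 3 and the click numbers are all assumptions:

```python
from statistics import mean, stdev

def flag_anomalies(daily_clicks: list[int], window: int = 7,
                   threshold: float = 3.0) -> list[int]:
    """Return indices of days whose clicks deviate from the trailing-window mean
    by more than `threshold` standard deviations (a simple z-score check)."""
    flagged = []
    for i in range(window, len(daily_clicks)):
        history = daily_clicks[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all counts as unusual
            is_anomaly = daily_clicks[i] != mu
        else:
            is_anomaly = abs(daily_clicks[i] - mu) / sigma > threshold
        if is_anomaly:
            flagged.append(i)
    return flagged

# Hypothetical daily clicks with a sudden drop on day 7 (zero-based)
clicks = [120, 118, 125, 122, 119, 121, 124, 60, 123]
print(flag_anomalies(clicks))  # → [7]
```

Because flagged days stay in later windows, one big drop inflates the standard deviation for the following week; a more robust version might exclude flagged days or compare against a seasonal baseline instead.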

Busting myths

We’re not here to say that third party data is bad. As mentioned above, it can be really useful when carrying out certain tasks. Overall, we advocate using a mixture of both GSC data and third party data.

However, whilst we don’t want to talk negatively about scraped data, we also want to make sure people understand the value of GSC data! There are some myths floating around about GSC data and we want to make sure everyone knows the full story.

Myth #1 - Competitor comparison is necessary to discover best performance

Comparing yourself with competitors is the only way to discover your best performance.

Fact

It’s true that third party data is better when it comes to analysing or researching your competitors. But do you really need to look at your competitors to assess your own performance? Why not evaluate how you as a business have improved over the past few years? Comparing your search performance year-on-year is a great way of doing this.
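
A year-on-year comparison is just the percentage change between the same period in two consecutive years, which keeps seasonal swings from being mistaken for real progress. A minimal Python sketch, with hypothetical click numbers:

```python
from typing import Optional

def yoy_change(current: float, previous: float) -> Optional[float]:
    """Percentage change from last year's value to this year's (None if last year was 0)."""
    if previous == 0:
        return None  # no baseline to compare against
    return (current - previous) * 100 / previous

# Hypothetical clicks for March last year vs. March this year
print(f"{yoy_change(4600, 4000):+.1f}%")  # → +15.0%
```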

Also, whilst third party data provides some insights into your competitors, these insights are very limited. Third party data providers only look at rankings, so they have no information about clicks. Clicks are more useful, as they show how many users actually engaged with your site.

Myth #2 - Third party data collection is better than that of the GSC

Scraped data providers collect data in a better way than the Google Search Console does. Google only takes into account pages and keywords that are actually used: if a page is not seen or clicked on, no data is collected.

Fact

It seems logical that Google only collects data on pages when they are seen or clicked on by actual users. If there isn’t a single search for a term, why would it be relevant to you? It shouldn’t be! You should be creating content that users are actually searching for. If no one is searching for the content, it isn’t relevant. Focus on the keywords that are actually causing your users to engage with your content!

Still, in Ryte Search Success you can add keywords that aren’t getting any searches yet to Keyword Monitoring. This way, you can see over time which of them gain traffic and rankings.

Myth #3 - Good weather leads to different data

If the weather is good, people spend time outside instead of looking at the search engine result pages. The same goes for other seasons, like the Christmas holidays. This affects the data.

Fact

Of course there will be some seasonal fluctuation, but this doesn’t impact your rankings! Users may search less, which means you might see a dip in impressions and clicks, but your rankings are not affected. If you want a KPI that is less likely to fluctuate, this is where Ryte can help. Ryte shows you the number of keywords you’re ranking for, which is less affected by seasonal fluctuations than clicks and impressions.
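
To illustrate why a keyword count is steadier than clicks, here’s a minimal Python sketch. It assumes rows shaped like Search Analytics API results grouped by query (`keys`, `clicks`, `impressions`), treats "ranking for" a keyword as having at least one impression, and uses hypothetical numbers for a busy month and a quieter holiday month; it is not Ryte’s implementation:

```python
def ranking_keyword_count(rows: list[dict]) -> int:
    """Count distinct queries with at least one impression in a period."""
    return len({row["keys"][0] for row in rows if row.get("impressions", 0) > 0})

def total_clicks(rows: list[dict]) -> int:
    """Sum clicks across all rows in a period."""
    return sum(row.get("clicks", 0) for row in rows)

# Hypothetical rows for a busy month and a quieter holiday month
busy = [{"keys": ["keyword a"], "clicks": 40, "impressions": 900},
        {"keys": ["keyword b"], "clicks": 25, "impressions": 600}]
quiet = [{"keys": ["keyword a"], "clicks": 15, "impressions": 350},
         {"keys": ["keyword b"], "clicks": 9, "impressions": 240}]

print(total_clicks(busy), total_clicks(quiet))                    # → 65 24
print(ranking_keyword_count(busy), ranking_keyword_count(quiet))  # → 2 2
```

Clicks drop by more than half in the quieter month, while the number of keywords the site appears for stays the same.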

Myth #4 - No comparison of mobile and desktop rankings is possible

You can’t properly compare mobile and desktop data in the Google Search Console because of the significantly different user behaviour. A smartphone user may be less likely to click on the 2nd or 3rd pages of the SERPs.

Fact

Actually, there is no better source than GSC for this kind of data, because it provides far more data than third party tools. Moreover, you can see how many desktop and mobile clicks your website gets simply by setting a device filter for mobile or desktop. The data isn’t necessarily displayed in a way that makes comparison easy, but this is where other tools (like Ryte) can help.
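
If you pull the data via the Search Console API rather than the interface, the `device` dimension gives you the same split (its values are `DESKTOP`, `MOBILE` and `TABLET`). The minimal Python sketch below totals clicks and impressions per device and recomputes CTR so the devices can be compared side by side; it assumes rows requested with the dimensions `["query", "device"]`, and the sample rows are made up:

```python
from collections import defaultdict

def summarize_by_device(rows: list[dict]) -> dict[str, dict]:
    """Total clicks and impressions per device and recompute CTR from the totals.

    Assumes each row's "keys" list is [query, device], i.e. the request used
    dimensions ["query", "device"].
    """
    totals = defaultdict(lambda: {"clicks": 0, "impressions": 0})
    for row in rows:
        device = row["keys"][1]
        totals[device]["clicks"] += row["clicks"]
        totals[device]["impressions"] += row["impressions"]
    return {
        device: {**t, "ctr": t["clicks"] / t["impressions"] if t["impressions"] else 0.0}
        for device, t in totals.items()
    }

# Hypothetical rows for two keywords
sample = [
    {"keys": ["keyword a", "MOBILE"], "clicks": 10, "impressions": 200},
    {"keys": ["keyword b", "MOBILE"], "clicks": 5, "impressions": 100},
    {"keys": ["keyword a", "DESKTOP"], "clicks": 20, "impressions": 200},
]
for device, stats in summarize_by_device(sample).items():
    print(device, stats["clicks"], stats["impressions"], f"{stats['ctr']:.1%}")
```

Recomputing CTR from summed clicks and impressions, rather than averaging per-row CTRs, weights each keyword by how often it was actually shown.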

Myth #5 - Ranking distribution cannot be relied upon

There is no reliable ranking distribution because data is only collected when a searcher actually views a results page, meaning results are skewed towards the first pages, where more user interaction takes place.

Fact

If users do not engage with your content, is it really relevant? It makes sense that more weight is given to pages that encourage greater user interaction.

Myth #6 - Incorrect view of problems and opportunities

It’s hard to see problems and opportunities. Google doesn’t measure the interesting keywords that rank lower down. If a user doesn’t click on your keyword on page 9, it won’t be reflected in the Google Search Console.

Fact

Would you really consider a ranking on page 9 relevant to you? If a user has seen the search result for a keyword, it is registered by the Google Search Console. These are the keywords that provide you with opportunities, and the ones you want to focus on.

Myth #7 - GSC does not show data for lost keywords

Keywords that ranked previously but are not ranking anymore will not show up in Google Search Console data.

Fact

This one isn’t quite a myth; it is true. But it only happens to keywords with so few impressions (2-3 per day) that they are most likely irrelevant for you to monitor anyway! For your most important keywords that drive traffic, GSC has consistent data and fully serves that purpose. Moreover, if these keywords are still important to you, you can view them in tools like Ryte, which has a report for your "lost" keywords. You can also keep monitoring these lost keywords with our Keyword Monitoring, to see whether you gain traffic and rankings again in the future.
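
Spotting lost keywords yourself comes down to a set difference: queries that appeared in an earlier export but not in the current one. A minimal Python sketch, assuming rows shaped like Search Analytics API results grouped by query and using made-up queries:

```python
def lost_keywords(previous_rows: list[dict], current_rows: list[dict]) -> list[str]:
    """Queries that had data in the previous period but none in the current one."""
    previous = {row["keys"][0] for row in previous_rows}
    current = {row["keys"][0] for row in current_rows}
    return sorted(previous - current)

# Hypothetical exports for two quarters
last_quarter = [{"keys": ["red shoes"]}, {"keys": ["blue shoes"]}, {"keys": ["green shoes"]}]
this_quarter = [{"keys": ["red shoes"]}, {"keys": ["green shoes"]}]
print(lost_keywords(last_quarter, this_quarter))  # → ['blue shoes']
```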

Myth #8 - No transparency due to data consolidation

There is no transparency due to data consolidation: Google consolidates data based on the canonical URL specified in a page’s HTML, which can be error-prone for larger websites.

Fact

Google does consolidate data, but the consolidated URLs will be reflected in the search results sooner or later anyway, so scraped data providers do not provide better data here.

Myth #9 - Delays in obtaining GSC data

There is a delay in Google Search Console data, meaning you aren’t working with the most current data.

Fact

This was true, but not any more! The GSC API now provides partial data from the previous day. What’s more, third party data providers run into problems extracting data at scale, so their data is usually even older (1 week or more).
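
For reference, the Search Analytics query in the API has a `dataState` field: leaving it out (or setting `"final"`) returns finalized data only, while `"all"` also includes fresh data that may still change. Here’s a minimal Python sketch that builds such a request body for the last seven days up to yesterday; the function name and the date range are our own choices:

```python
from datetime import date, timedelta
from typing import Optional

def fresh_data_body(days: int = 7, today: Optional[date] = None) -> dict:
    """Build a Search Analytics query body for the `days` days ending yesterday,
    including fresh (not yet finalized) data via dataState="all"."""
    if today is None:
        today = date.today()
    end = today - timedelta(days=1)         # yesterday
    start = end - timedelta(days=days - 1)  # inclusive range of `days` days
    return {
        "startDate": start.isoformat(),
        "endDate": end.isoformat(),
        "dimensions": ["date"],
        "dataState": "all",  # omitting this (or "final") returns finalized data only
    }

print(fresh_data_body(7, today=date(2024, 5, 10)))
```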

Conclusion

Hopefully, reading through these facts shows that the Google Search Console sometimes gets an unfair reputation. GSC isn’t perfect, but it is the most holistic and reliable data source available for conducting deep analyses of your search performance. And don’t forget that scraping goes against Google’s guidelines, which is one more reason why GSC data is the most reliable source.

Both scraped data and GSC data are needed when analysing organic performance, but competitor analysis tasks are nowhere near as frequent as your own monitoring and reporting. It’s important to have a strong and reliable system in place so you’re aware when things go wrong, and can find out what actually happened.

For the best results and to cover more bases, you should use both Google Search Console data and scraped data, as they’re useful for different purposes. Just make sure you’re using the most suitable data for the task you’re trying to carry out :)

You can’t rely on just one platform for everything! If you want to be the best, you have to use the best data.